There are so many ways to extract keywords from Google that it can be hard to know which method works best. One of the most helpful is using SerpApi to extract People Also Ask questions.

In this post, I will walk you through the process of getting questions and real user insights by using two main tools:

- Google Colab.

- SerpApi.

Why is getting questions from Google relevant to your SEO strategy? For many reasons, especially these:

- You get direct feedback on what people are looking for on the topic your SEO strategy targets.

- It allows you to grab many ideas to build content calendars.

- It’s a powerful way to create internal linking strategies since you find related topics or questions for your blog posts.

- Using these keywords makes it easier to build high-impact page structures from scratch.

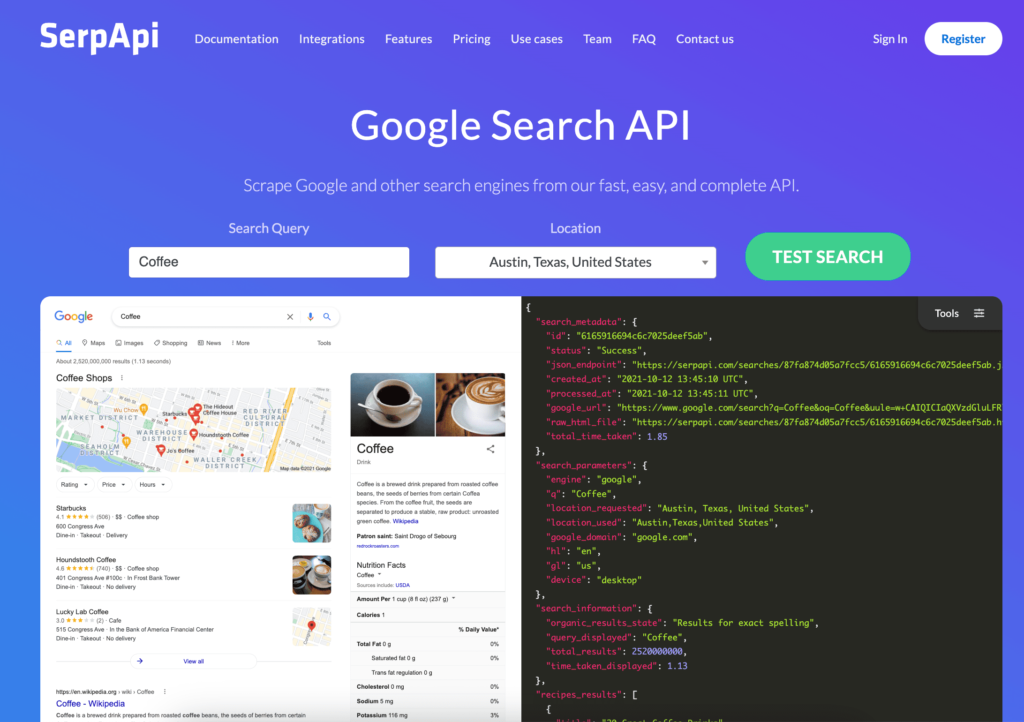

Step by step: how to create your SerpApi account

- Go to SerpApi and look for the “Register” button on the top menu:

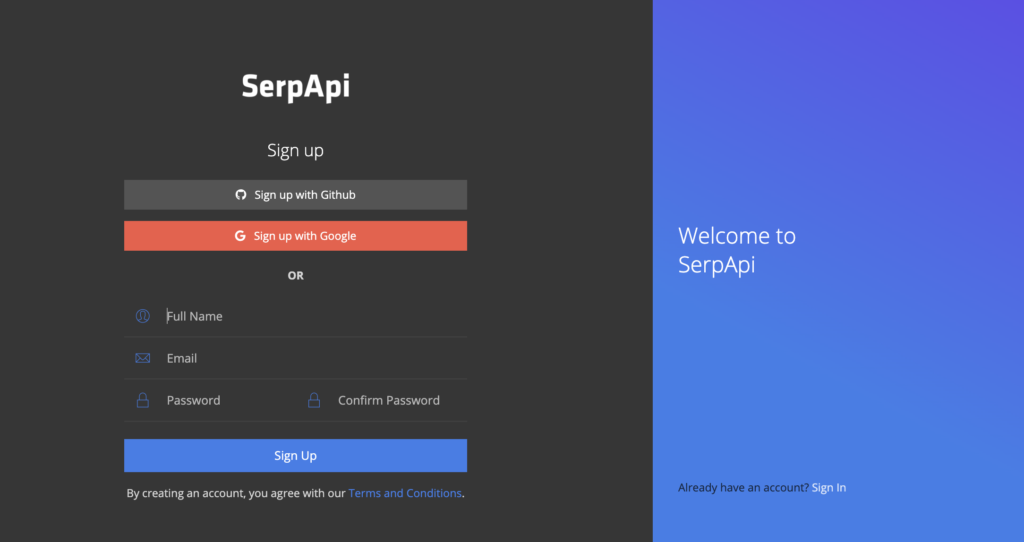

- Click “Register,” and you will see two ways to create your account.

- The first one is by signing up with your email account (the easiest one).

- The second one is by entering your personal information.

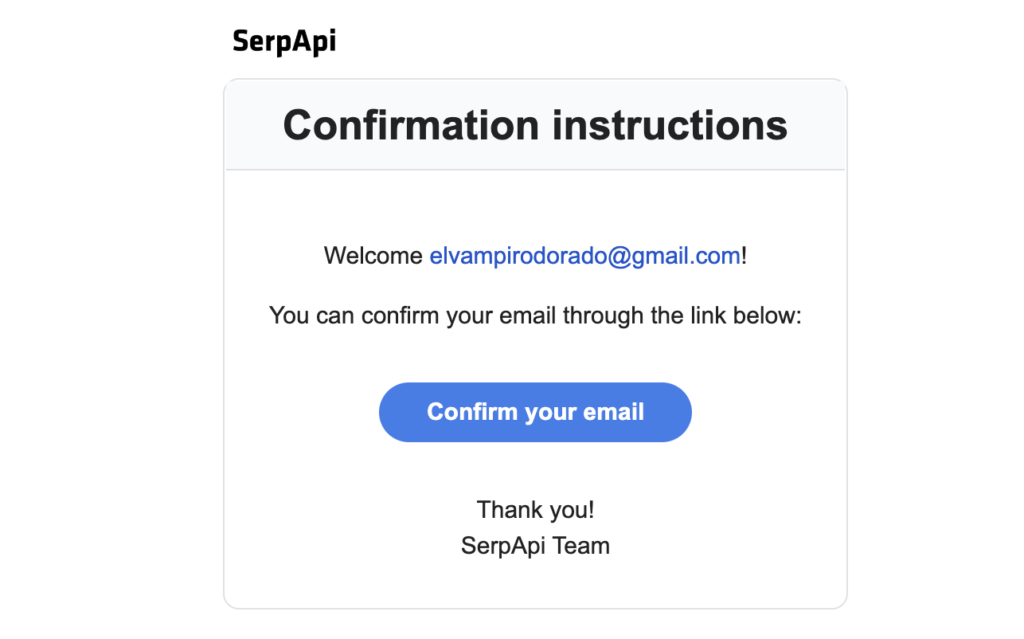

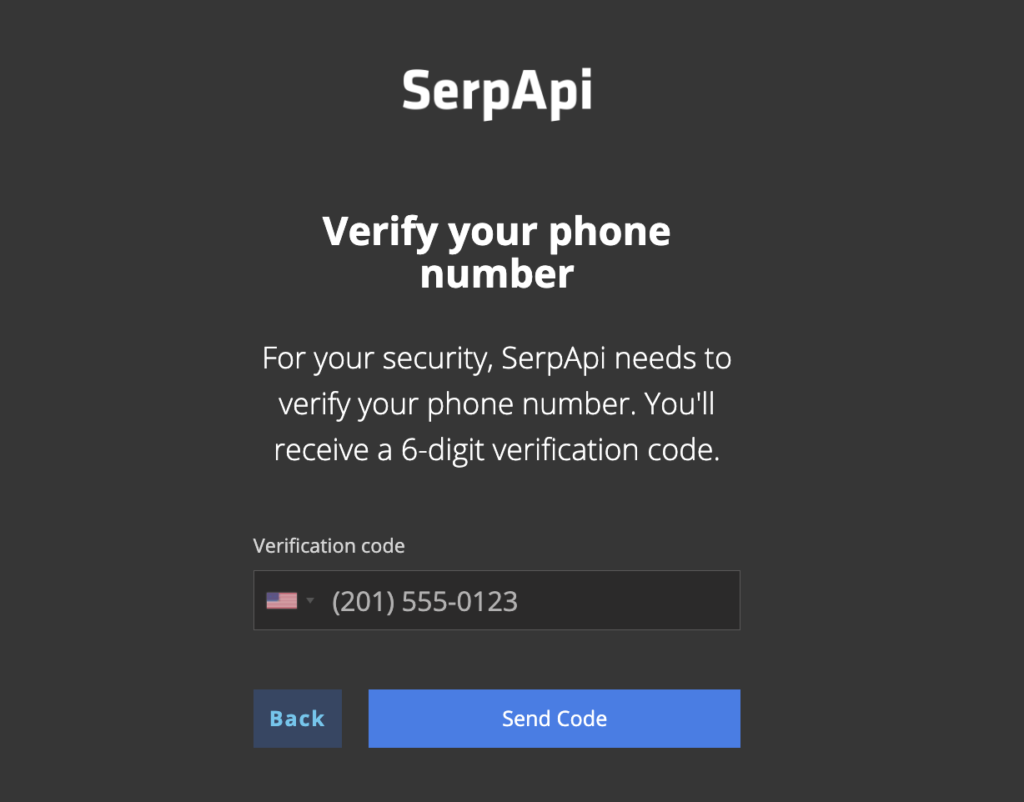

- The app will ask you to verify your account by completing a two-step process:

- Email verification: you will get an email with a link to verify your account.

- Cellphone verification: you can use any phone number to receive a 6-digit code.

- After verification, you are set to start using the SerpApi app. The app will redirect you to a dashboard with your name, an API key, and the list of Google products available to extract data from.

A basic understanding of the Google Search API for extracting People Also Ask:

On the right, you will find a menu full of Google products, ranging from Google Search to more specialized databases such as Google Finance or Google Flights.

In this project, we’ll work with Google Search API, which has features similar to those we use to search for information or different products on Google.

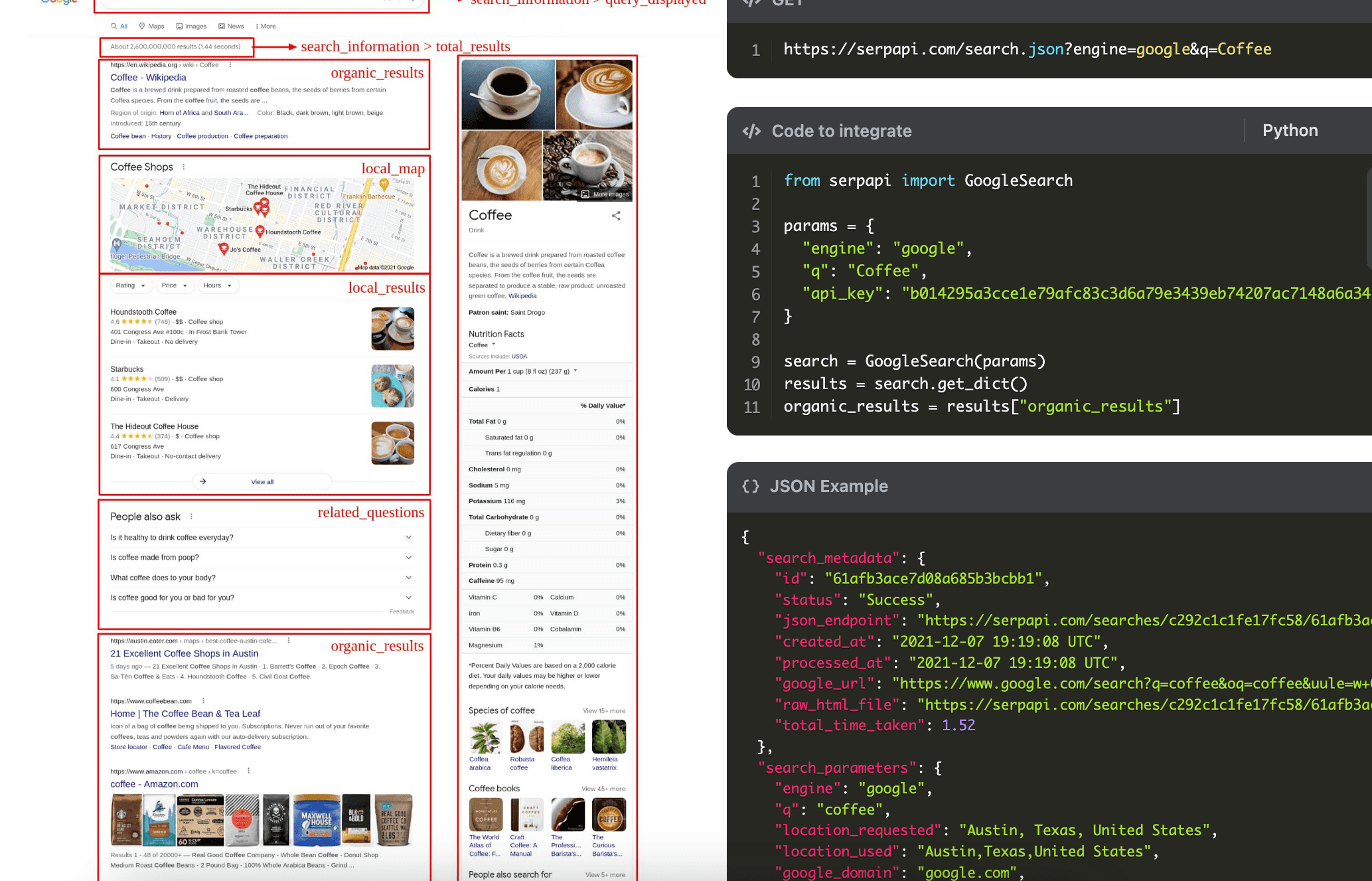

SerpApi shows us the main features or resources available if we use the API to extract information. You can see them highlighted in red on the left part of the image:

- Local map

- Local results

- Related questions

- Organic results

- Knowledge graph

- Related searches

To get you started, SerpApi offers a basic code snippet that extracts the results for one keyword:

from serpapi import GoogleSearch

params = {

"engine": "google",

"q": "Coffee",

"api_key": "your_api_key"

}

search = GoogleSearch(params)

results = search.get_dict()

organic_results = results["organic_results"]

This code contains the basic structure you’ll need to fetch the results Google returns for a keyword. As you can see, the params object holds the settings for the search. The most common settings are:

- engine: the search engine to query; Google, in this case.

- q: the keyword you are looking for on Google.

- location: the city and the country to search from, for a more specific search.

- hl: the language you are interested in.

- gl: the country.

- google_domain: the Google domain to use. You can use different versions: google.com, google.fr, google.es.

- num: the maximum number of results you are expecting to get (the first 10, 20, 30 results from the SERPs).

- start: the result offset, used for pagination. The default value is 0, which fetches the first page of results; 10 fetches the second page, 20 the third page, etc.

- safe: this optional parameter controls the level of adult content filtering. It can be set to “active” or “off”.

- api_key: the private key you receive once you create your SerpApi account. You can find it in the main API dashboard.
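To make the list above concrete, here is a minimal sketch of a params dictionary that sets most of these options. The helper name `build_params`, the example location string, and the page arithmetic are assumptions made for illustration; only the parameter names come from the list. The helper just builds the dictionary and does not call the API.

```python
def build_params(keyword, api_key, page=1, results_per_page=10):
    """Build a params dict for a Google search (hypothetical helper)."""
    return {
        "engine": "google",
        "q": keyword,
        "location": "Austin, Texas, United States",  # example value, an assumption
        "hl": "en",                    # language
        "gl": "us",                    # country
        "google_domain": "google.com",
        "num": results_per_page,       # how many results to return
        "start": (page - 1) * results_per_page,  # offset: 0 = page 1, 10 = page 2
        "safe": "active",              # adult content filter
        "api_key": api_key,
    }


params = build_params("Coffee", "your_api_key", page=2)
print(params["start"])  # 10
```

The resulting dictionary can be passed to `GoogleSearch(params)` in the same way as the basic example.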

The first version to fetch People Also Ask questions:

- After creating your notebook in Google Colab, install and import the following libraries:

!pip install google-search-results

import pandas as pd

import requests

from serpapi import GoogleSearch

- After you run this cell, you’ll get an output like this:

- After installing the libraries and their dependencies, we store the SerpApi key in a variable and define the parameters for the Google domain, language, and country:

api_key = "your_api_key"  # replace with the private key from your SerpApi dashboard

params = {

    "google_domain": "google.com",

    "hl": "en",

    "gl": "us",

    "api_key": api_key,

}

- The second part of the code sets the keyword we want to look up and calls the functions for the data extraction.

keyword = "Colombian food"

params["q"] = keyword  # "q" is the parameter that carries the search query

busqueda = GoogleSearch(params)

fetch_data = busqueda.get_dict()

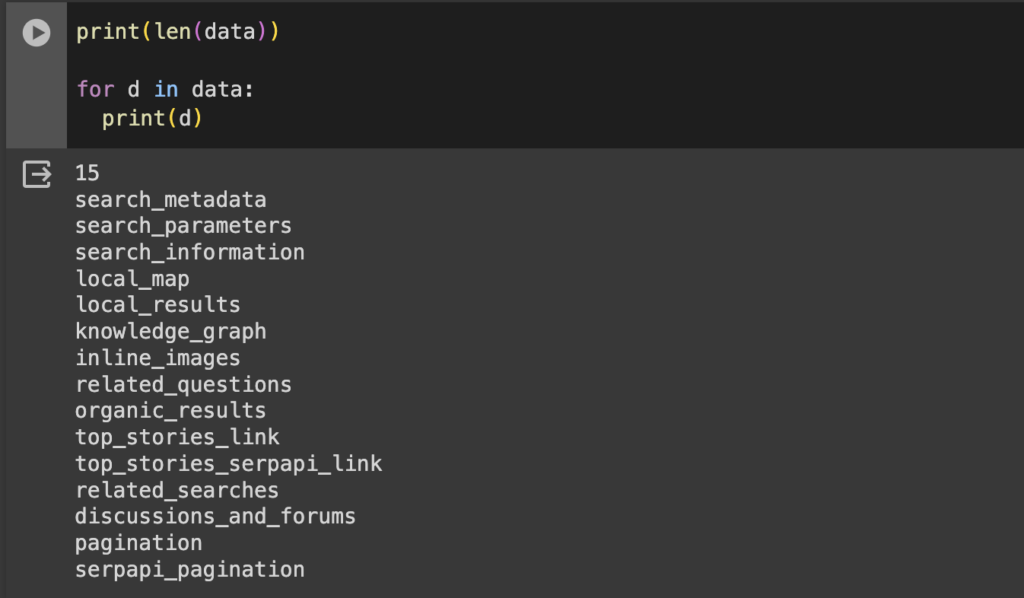

data = fetch_data

- After we successfully fetch the data, we loop over it to see which keys the response contains. In this case, we need to get the People Also Ask questions from related_questions.
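A minimal sketch of that loop, run against a small made-up response so it works without an API key. The `sample_response` dictionary is an assumption that only mimics the general shape of a real result, and `top_level_keys` is a hypothetical helper.

```python
# Made-up stand-in for the dictionary returned by get_dict()
sample_response = {
    "search_metadata": {"status": "Success"},
    "organic_results": [{"title": "Example result"}],
    "related_questions": [{"question": "What is a typical Colombian dish?"}],
}


def top_level_keys(response):
    """Return the names of the sections found in the response."""
    return list(response)


for key in top_level_keys(sample_response):
    print(key)

# related_questions may be absent for some keywords, so fall back to an empty list
questions = sample_response.get("related_questions", [])
print(len(questions))  # 1
```

With a real response, you would pass `data` instead of `sample_response`.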

Now that we know which list we will use, I want to check the information that related_questions holds. We can do that with a for loop over the keys of its first dictionary. The first one is enough, since every dictionary has the same variables:

people_also_ask = data['related_questions']

primer_dict = people_also_ask[0]

for dictionary in primer_dict:

    print(dictionary)

As we see in the image, related_questions returns eight variables. For our purpose, it is helpful to extract four of them: question, snippet, title, and link.

To do that, we first need to store the variables we want to get in a list.

variables = ['question', 'snippet', 'title', 'link']

Then, we’ll iterate through the list people_also_ask to extract the desired variables and push that information into list_question:

list_question = []

for item in people_also_ask:

    filtered_dict = {variable: item[variable] for variable in variables}

    list_question.append(filtered_dict)

Finally, to show the information nicely, we’ll use a simple data frame with this code:

df = pd.DataFrame(list_question)

pd.set_option('display.max_colwidth', None)

df

Here are the four questions extracted from Google when someone searches for information related to “Colombian food.”
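One caveat with the extraction loop: `item[variable]` raises a KeyError if a question happens to lack one of the four variables. A slightly more defensive sketch, assuming that can occur, uses `.get()` so missing values become None. The `extract_questions` helper and the sample data are hypothetical and only illustrate the idea.

```python
def extract_questions(related_questions,
                      fields=("question", "snippet", "title", "link")):
    """Keep only the chosen fields; missing ones become None (hypothetical helper)."""
    return [
        {field: item.get(field) for field in fields}
        for item in related_questions
    ]


# Made-up sample: the second entry has no snippet
sample = [
    {"question": "Q1", "snippet": "S1", "title": "T1",
     "link": "https://example.com/1", "extra": 1},
    {"question": "Q2", "title": "T2", "link": "https://example.com/2"},
]

rows = extract_questions(sample)
print(rows[1]["snippet"])  # None
```

The resulting list of dictionaries can be passed to `pd.DataFrame(rows)` in the same way as list_question.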

I hope you find this resource helpful for extracting questions and content to improve your blog post, internal linking, or content calendar strategy.

I am a skilled and self-motivated marketing and SEO professional with 11+ years of experience in different digital marketing areas: content planning, information architecture, and primarily creating, leading, and tracking SEO strategies. In the last six years, I have specialized my career in developing processes to redesign and launch websites successfully using Hubspot’s Growth-Driven Design methodology.
